DISCLAIMER:

(1) If you are already familiar with functions, variables, types, and other coding basics, C4CNC101 is not for you. I'd recommend taking a look at something like The Nature of Code if you're interested in getting up and running with processing.

(2) A familiarity with digital art in general, including coordinate systems, pixels, etc., will be extremely helpful. If you've ever used Photoshop, Illustrator, or any other digital art program, 2D or 3D, you should be good to go.

(3) If you're a programmer, you'll probably find tons of inconsistencies or things I'm glossing over. My goal here is not to teach programming; it's to get people who want to use code as a tool to create (or augment the creation of) art up and running, with enough foundational knowledge to research deeper if they so choose. I've done a lot of thinking about this, and I believe the information as I've presented it is true in spirit and within the scope of processing.

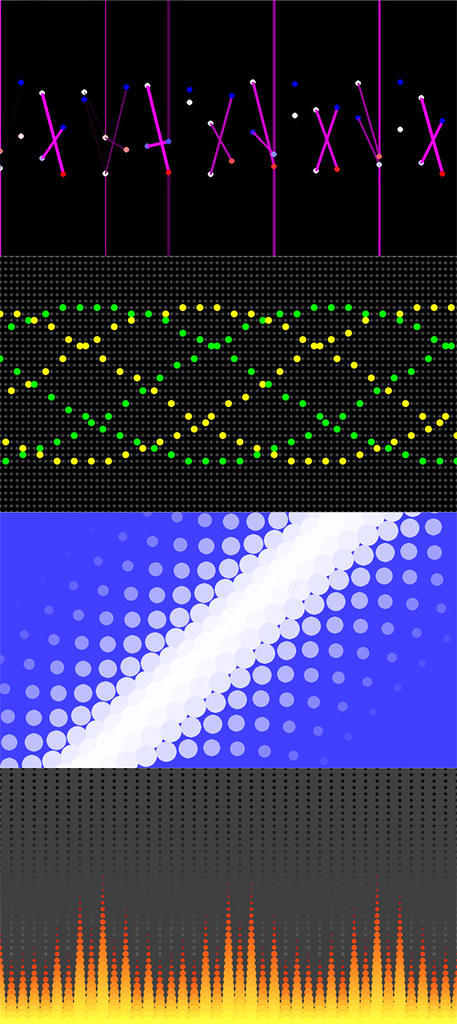

Ok, so hopefully by now you've downloaded and installed processing, signed up for an OpenProcessing account, and joined the C4CNC Classroom on OpenProcessing. The first step is really the only requirement, but I do recommend at least peeking around OpenProcessing to get an idea of what's possible. I'll warn you in advance that if you're just starting out, it can be pretty easy to get overwhelmed by the breadth and depth of content there, but fear not! Hopefully by the time we're through these first five lessons, you'll know enough to read through some of the sketches and even build your own sketches based on them. As I mentioned in the last post, if you come across any sketches or effects you'd like to remix, break down, or dive into deeper, let me know and I'll work something out for a future set of tutorials. Alright, so let's begin!

First, let's conceptualize a computer program as nothing more than a set of commands or instructions that processes information and produces results based on the specifics of that information and those commands. While that's a bit of an oversimplification, on some level it holds true for any program, from the small visualization sketches we'll be writing here all the way up to full-blown operating systems like Windows or Linux. We call these instructions functions, and we call the information data. So let's write our first program. Open processing and type the following function:

```processing
ellipse(50, 50, 50, 50);
```

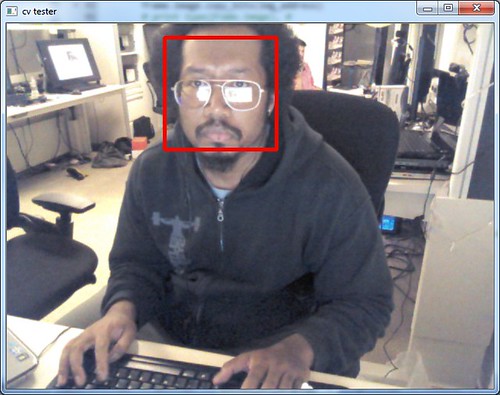

Once that's in place, press the Run button (it looks like a 'Play' button) in the upper left hand corner. Alternately, you can check out the sketch on OpenProcessing(1-1: Basic Functions), although I highly recommend you follow along by typing the code yourself to get the most out of these lessons. Either way, you should see something like the following:

CODERSPEAK: When we issue a command in a program, we say we are calling the function or making a function call, and when we provide data to a function, we say we are passing an argument (or arguments). So when we issue a command and give it some information, we are calling a function with arguments.

This may not look like much, but it's actually a valid processing sketch, so congrats. In some languages, Python for example, a single function call like this can also be a valid and complete program, so not bad for a first step! Sure, it's not very exciting and doesn't do much, but we'll get there.

Now, let's take a moment and break down our function call. For our purposes, every function call will be a name followed by a set of parentheses. If we're passing arguments to the function, they'll go between the parentheses, separated by commas. Finally, we end our function call with a semicolon, so processing knows to move on to the next function. Thus, the skeleton for any function call is:

```
functionName(argument1, argument2, argument3, etc);
```
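To see the skeleton hold up with different numbers of arguments, here's a small example sketch. It assumes processing's default 100 x 100 window and uses two built-ins we haven't covered yet: noStroke(), which turns off shape outlines, and the one-argument form of background(), which takes a single gray value. Check the Language Reference for the details on both.

```processing
// Every call below follows the same skeleton:
// a name, parentheses, comma-separated arguments (if any), and a semicolon.
background(200);          // one argument: a gray value from 0 (black) to 255 (white)
noStroke();               // zero arguments, but the parentheses and semicolon are still required
ellipse(50, 50, 50, 50);  // four arguments, separated by commas
```

The circle is drawn with processing's default white fill and, because noStroke() was called first, no outline.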

Recall that we started out by defining a program as a set of commands (functions) that processes information (data) to produce a result. Arguments are how we provide data to a function. When we pass multiple arguments, the function uses each one for a specific task along the way to producing the final result. So in the case of our first sketch, as the programmer we're telling processing to:

Draw an ellipse centered at 50 pixels along the x-axis and 50 pixels along the y-axis, with a size of 50 pixels along the x-axis and 50 pixels along the y-axis.

Most, if not all, publicly available coding tools and environments have references that describe (some in more detail than others) what each argument does. For example, take a look at the reference page for the ellipse() function, which not only details the arguments, but also provides some useful tips on calling ellipse().
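Here's the same kind of call with each argument labeled, plus a second ellipse whose width and height differ, so you can see the last two arguments working independently. This is a small illustrative sketch that assumes the default 100 x 100 window and processing's default ellipseMode(CENTER), where the first two arguments give the center of the ellipse.

```processing
// ellipse(x, y, width, height)
// With the default ellipseMode(CENTER), x and y mark the center of the shape.
ellipse(50, 50, 50, 50);  // a circle: width and height are equal
ellipse(50, 50, 80, 20);  // same center, but 80 pixels wide and 20 pixels tall
```

Since processing draws shapes in the order the functions are called, the second ellipse ends up on top of the first.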

Alright, so let's practice a bit by adding another function. Add a call to size() before the ellipse() call, so your sketch contains the following function calls. Note that we're changing some of the arguments to the ellipse() call, and you should feel free to change any of the arguments to any of the functions. Experimentation is key to learning!

```processing
size(400, 400);
ellipse(200, 200, 50, 50);
```

As you can probably tell from the result, the size() function sets the size of our sketch's window in pixels. Even though the two functions take different numbers of arguments and produce markedly different results, you can see that they both follow the same skeleton we outlined above, i.e.:

```
functionName(argument1, argument2, argument3, etc);
```

Before we get a little more advanced, let's add a few more basic processing functions, both for practice and to see how we can affect what we're drawing on-screen. This will give us an idea of the kind of drawing functionality processing makes available to our sketches. We're going to add three more function calls in between our size() call and our ellipse() call: background(), stroke(), and fill(). Type these functions in as presented below:

```processing
size(400, 400);
background(0, 0, 0);
stroke(255, 255, 255);
fill(0, 128, 255);
ellipse(200, 200, 100, 100);
```

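To make the new arguments a little less mysterious, here's the same sketch with a comment on each line. The comments assume processing's default RGB color mode, in which the three numbers passed to background(), stroke(), and fill() are red, green, and blue amounts from 0 to 255.

```processing
size(400, 400);               // window: 400 pixels wide by 400 pixels tall
background(0, 0, 0);          // red 0, green 0, blue 0: a black background
stroke(255, 255, 255);        // all three channels at 255: white outlines
fill(0, 128, 255);            // no red, about half green, full blue: a bright blue interior
ellipse(200, 200, 100, 100);  // a 100-pixel circle in the center, using the stroke and fill set above
```

stroke() and fill() only affect shapes drawn after they're called, which is why they come before ellipse() here.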

As the saying goes, the more things change, the more they stay the same. As we add functions, the results compound and the output becomes more complex, but in the end, all functions are called in the same manner using the same syntax. Feeling comfortable typing in functions? Then give the following exercises a try and see what you come up with. Questions? Please post them in the comments!

EXERCISE 1: Draw 5 different ellipses with different radii and in different locations. Be sure to check out the Processing Language Reference for ellipse() for more details on how the ellipse() function works. Try changing some of the arguments to the other functions as well!

Exercise 1-1

EXERCISE 2: Take a look at the Language Reference for background(), stroke(), and fill(). Now, take the previous exercise and change the stroke and fill color for each ellipse. While you're at it, change the background color to something a bit friendlier than black; it's getting a bit gloomy in here...

Exercise 1-2

CODERSPEAK: You might be wondering how processing knows what to do when we call any of the functions presented here. Well, most, if not all, programming languages and environments come with a set of pre-existing functions and data that we use to build up our programs initially, which you'll often hear referred to as built-ins or library functions. When writing programs, you'll use a combination of built-in functions and data along with functions and data you define yourself. We'll discuss this process in the next couple of lessons.

REVIEW AND REFERENCE

processing Homepage

processing Language Reference

background() API Reference

ellipse() API Reference

fill() API Reference

size() API Reference

stroke() API Reference
